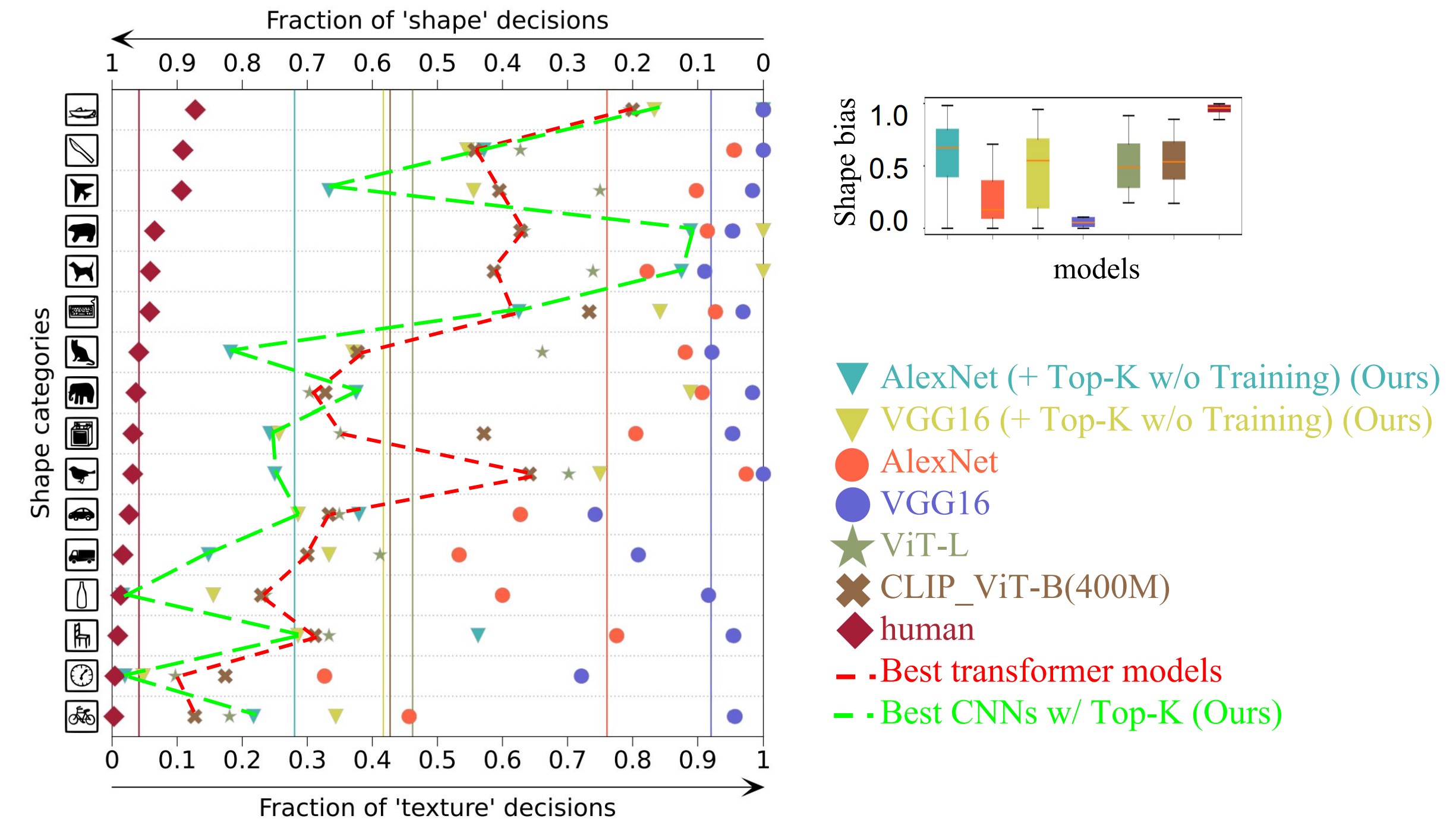

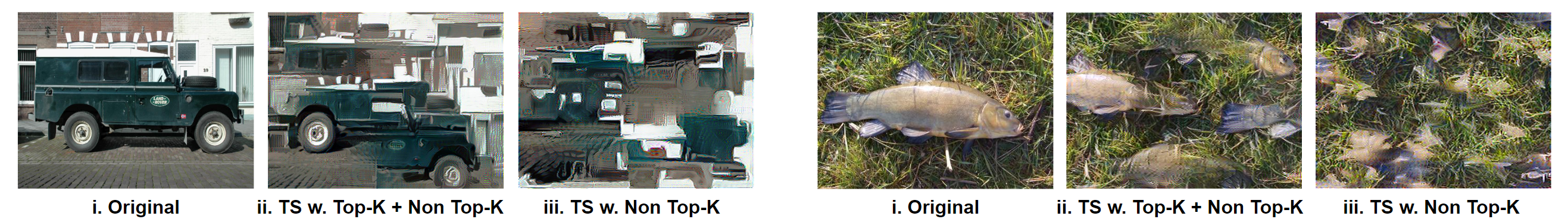

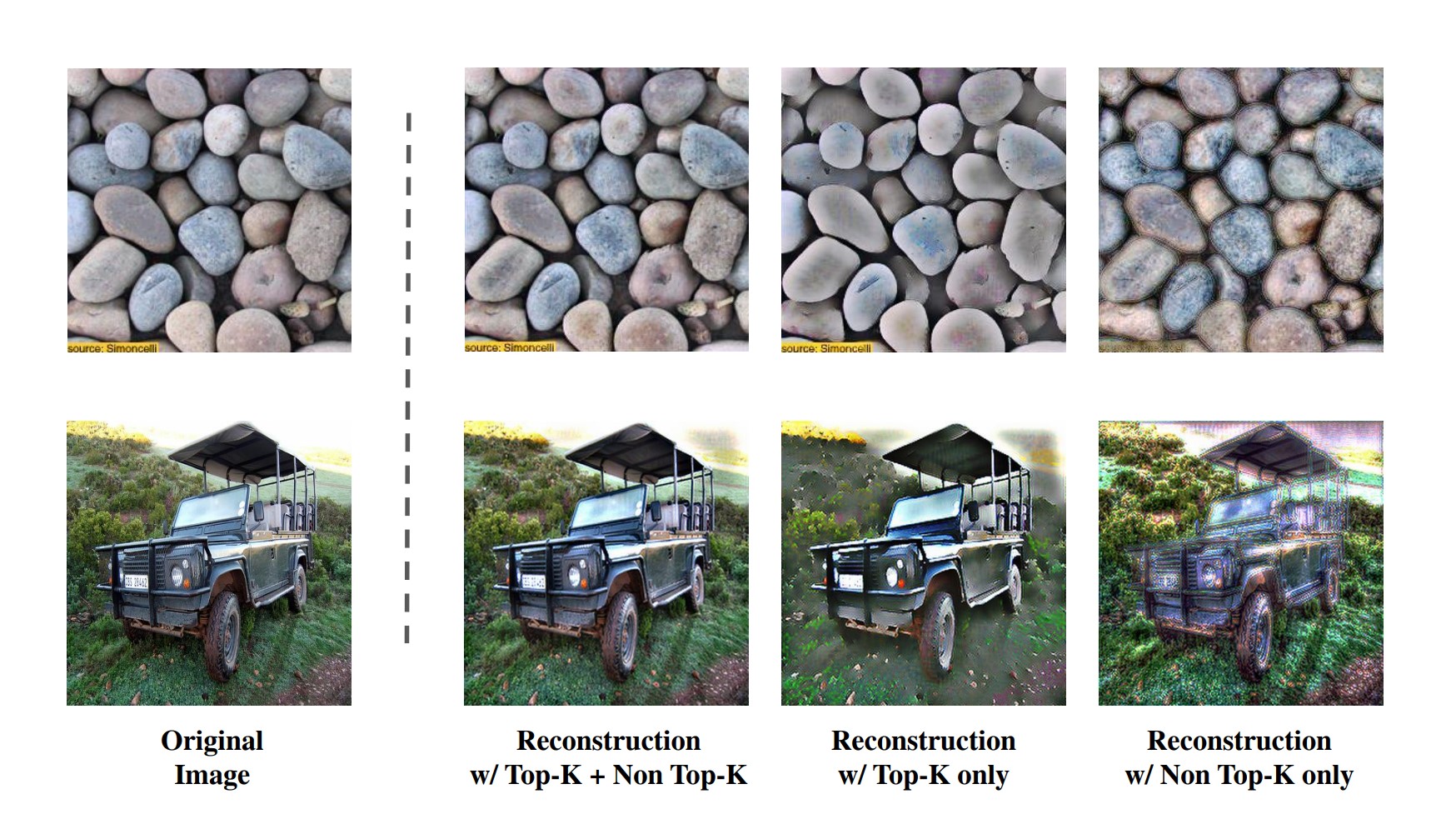

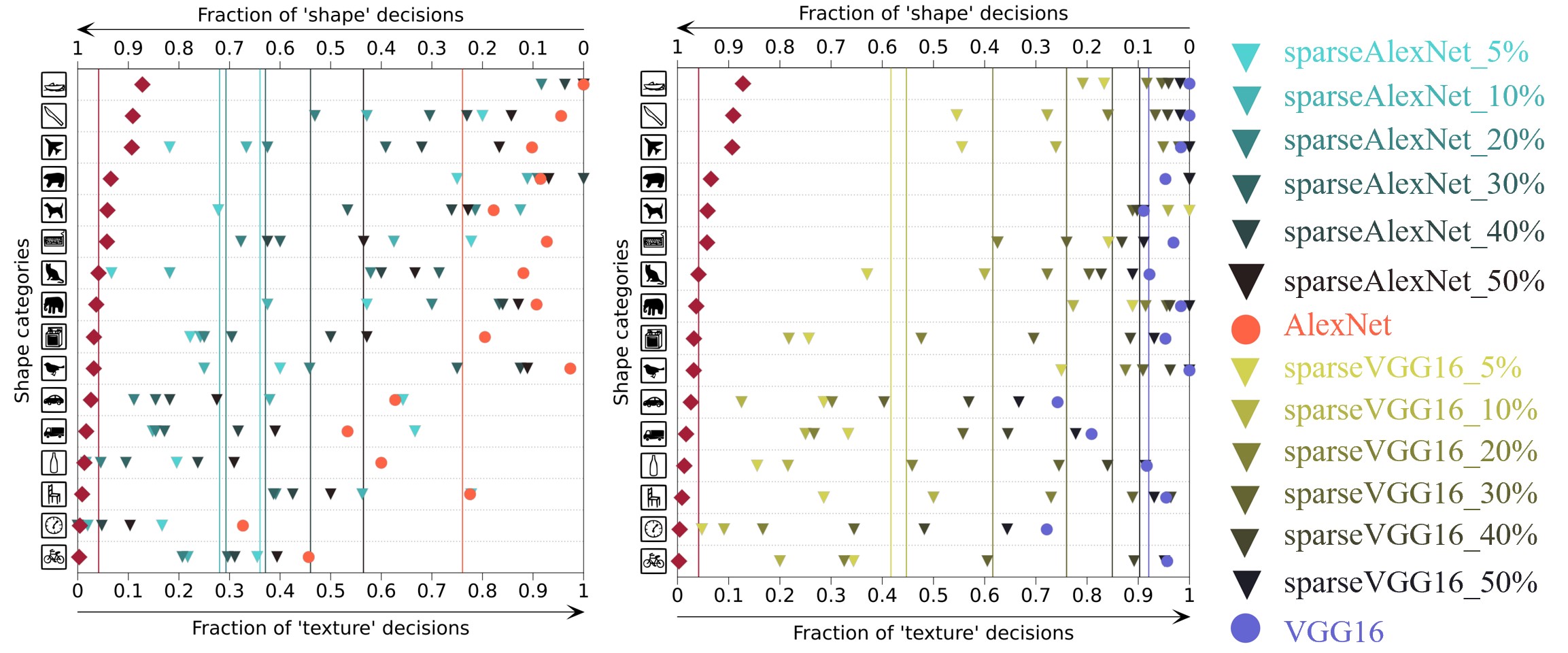

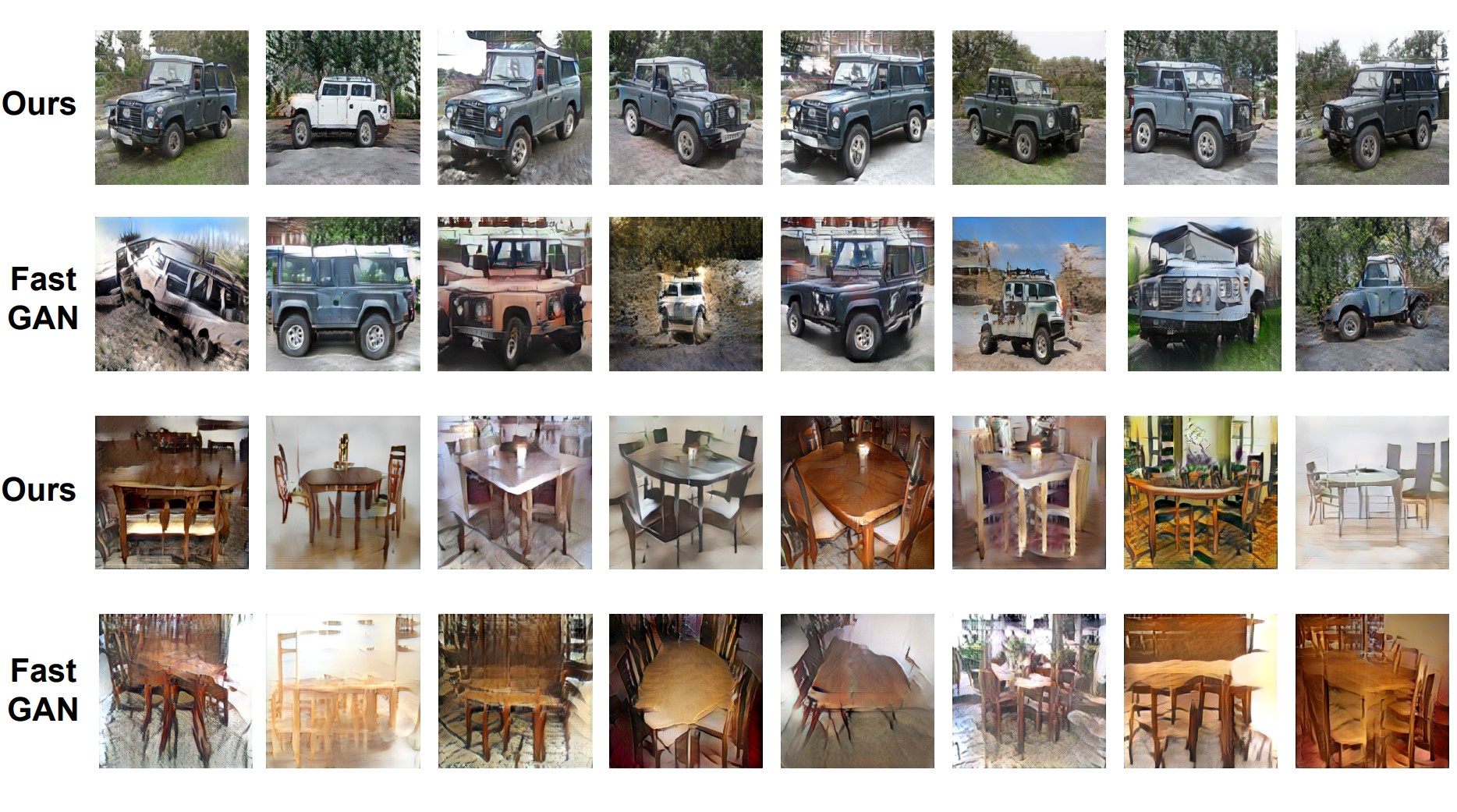

Current deep-learning models for object recognition are known to be heavily biased toward texture. In contrast, human visual systems are known to be biased toward shape and structure. What design principles in human visual systems could have led to this difference? How could we introduce more shape bias into deep-learning models? In this paper, we report that sparse coding, a ubiquitous principle in the brain, can in itself introduce shape bias into the network. We found that enforcing the sparse coding constraint using a non-differentiable Top-K operation can lead to the emergence of structural encoding in neurons in convolutional neural networks, resulting in a smooth decomposition of objects into parts and subparts and endowing the networks with shape bias. We demonstrated this emergence of shape bias and its functional benefits for different network structures with various datasets. For object recognition convolutional neural networks, the shape bias leads to greater robustness against distraction from style and pattern changes. For image synthesis generative adversarial networks, the emergent shape bias leads to more coherent and decomposable structures in the synthesized images. Ablation studies suggest that sparse codes tend to encode structures, whereas more distributed codes tend to favor texture.
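To make the Top-K operation described above concrete, here is a minimal NumPy sketch of the forward pass only. It assumes the top values are selected within each channel across spatial positions; that axis choice, the function name `topk_sparsify`, and the `keep_ratio` parameter are illustrative assumptions, not details confirmed on this page.

```python
import numpy as np


def topk_sparsify(activations, keep_ratio=0.05):
    """Zero all but the largest `keep_ratio` fraction of values per channel.

    activations: array of shape (C, H, W), one feature map per channel.
    Assumption: Top-K is taken over the H*W positions of each channel.
    """
    c, h, w = activations.shape
    n = h * w
    flat = activations.reshape(c, n)
    # Number of values to keep per channel (at least one).
    k = max(1, int(round(keep_ratio * n)))
    # Per-channel threshold: the k-th largest value, found by partial sort.
    thresh = np.partition(flat, n - k, axis=1)[:, n - k]
    # Keep values at or above the threshold; ties may keep slightly more than k.
    mask = flat >= thresh[:, None]
    return (flat * mask).reshape(c, h, w)


# Hypothetical input: 3 channels of 20x20 random activations.
rng = np.random.default_rng(0)
x = rng.normal(size=(3, 20, 20))
y = topk_sparsify(x, keep_ratio=0.05)
```

The hard selection has no useful gradient with respect to which positions are kept; in a training framework, gradients would typically flow only through the retained activations.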

Classification results on the Shape Bias Benchmark. The plot shows the shape bias of sparse CNNs, CNNs, and humans for each class in the texture-shape cue-conflict dataset. It also shows the shape bias at different degrees of sparsity; for example, 5% means that only the top 5% of activation values are passed to the next layer. Vertical lines mark the average values.
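As a rough illustration of how a shape-bias score can be computed on cue-conflict images, the sketch below assumes the definition commonly used with texture-shape cue-conflict benchmarks: among predictions that match either the shape label or the texture label, the fraction that match the shape label. The function name and the toy labels are hypothetical, and this is not necessarily the exact evaluation code behind the plot.

```python
def shape_bias(predictions, shape_labels, texture_labels):
    """Fraction of shape decisions among all shape-or-texture decisions."""
    shape_hits = 0
    texture_hits = 0
    for pred, shape, texture in zip(predictions, shape_labels, texture_labels):
        if shape == texture:
            continue  # not a cue-conflict image, so it carries no signal
        if pred == shape:
            shape_hits += 1
        elif pred == texture:
            texture_hits += 1
        # Predictions matching neither cue are ignored.
    total = shape_hits + texture_hits
    return shape_hits / total if total else float("nan")


# Toy, made-up labels purely for illustration.
preds = ["cat", "elephant", "dog", "car"]
shapes = ["cat", "cat", "dog", "boat"]
textures = ["elephant", "elephant", "clock", "bottle"]
score = shape_bias(preds, shapes, textures)
```

Here two predictions follow shape, one follows texture, and one matches neither cue, so the score is 2/3.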

@article{li2023emergence,
  author  = {Li, Tianqin and Wen, Ziqi and Li, Yangfan and Lee, Tai Sing},
  title   = {Emergence of Shape Bias in Convolutional Neural Networks through Activation Sparsity},
  journal = {NeurIPS},
  year    = {2023},
}